When large language models (LLMs) first became accessible, most of our interactions with them were bound within a single prompt-response cycle. You asked, they answered. But as developers began embedding AI into real systems such as IDE copilots, it became clear that prompts alone couldn’t sustain meaningful workflows. AI needed context, memory, and the ability to act, not just chat. That’s where the Model Context Protocol (MCP) enters the picture: it addresses both the need for context and the need to act.

At its core, MCP is an open standard that lets AI models connect to external systems in a structured, context-aware way. Think of it as the connective tissue between an AI and the tools it depends on: databases, project trackers, and code environments. Rather than requiring a custom integration for each tool, MCP provides one common interface, which eases the integration bottleneck for agentic systems and enables real-time, context-aware automation.

Why Not Just Call APIs Directly?

Why not let the model talk directly to the tool’s API?

The short answer is control and security.

MCP defines a client-server pattern that allows AI systems to interact with real-world applications through a common interface. This allows models to securely call external tools, fetch structured data, and perform actions without the LLM needing to know every detail about the API behind it. It standardizes how models “see” tools, what they can access, and how they act, which keeps everything modular, secure, and interoperable.

How It Works

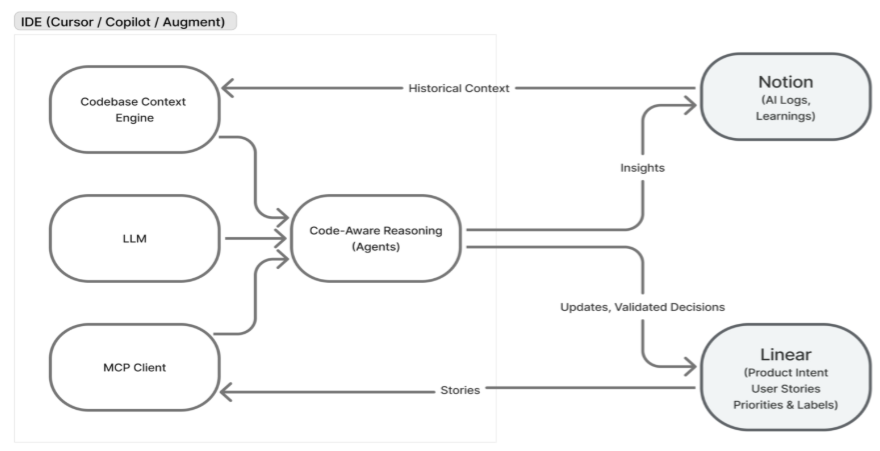

In a typical MCP architecture, an LLM communicates through an MCP client, which routes requests to one or more MCP servers. The client handles translation between the model’s natural language intent and the technical request schema, while the server executes the actual tool actions like storing data, fetching content, or performing updates. Some IDE environments, such as Cursor, already act as an MCP client under the hood, enabling seamless communication with compatible servers. This design separates the language model from the tool’s raw APIs.
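To make the separation concrete, here is a minimal in-process sketch of the client-server round trip. It is not the official MCP SDK: `ToyToolServer`, `ToyClient`, `get_story`, and the `STORIES` data are hypothetical names invented for illustration, and the transport is a plain function call rather than stdio or HTTP. What it does borrow from MCP is the message shape: a JSON-RPC 2.0 request with the `tools/call` method, carrying a tool `name` and its `arguments`, answered by a result that holds a list of content items.

```python
import json
from typing import Any, Callable, Dict


class ToyToolServer:
    """Hypothetical stand-in for an MCP server: owns the tools and runs them."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def handle(self, raw_request: str) -> str:
        """Take one JSON-RPC request string and return one JSON-RPC response string."""
        request = json.loads(raw_request)
        reply: Dict[str, Any] = {"jsonrpc": "2.0", "id": request.get("id")}
        params = request.get("params", {})
        tool = self._tools.get(params.get("name"))
        if request.get("method") != "tools/call":
            reply["error"] = {"code": -32601, "message": "Method not found"}
        elif tool is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            result = tool(**params.get("arguments", {}))
            # Simplified result: a single text content item holding JSON.
            reply["result"] = {"content": [{"type": "text", "text": json.dumps(result)}]}
        return json.dumps(reply)


class ToyClient:
    """Hypothetical stand-in for an MCP client: builds requests, unwraps replies."""

    def __init__(self, server: ToyToolServer) -> None:
        self._server = server
        self._next_id = 1

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        request = {
            "jsonrpc": "2.0",
            "id": self._next_id,
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
        self._next_id += 1
        response = json.loads(self._server.handle(json.dumps(request)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return json.loads(response["result"]["content"][0]["text"])


# Made-up data standing in for whatever system the server wraps.
STORIES = {"S-1": {"title": "Add login audit log", "status": "Todo"}}


def get_story(story_id: str) -> Dict[str, str]:
    return STORIES[story_id]


server = ToyToolServer()
server.register("get_story", get_story)
client = ToyClient(server)
print(client.call_tool("get_story", {"story_id": "S-1"}))
```

The caller only ever names a tool and passes arguments; how `get_story` reaches its data stays entirely on the server side, which is the decoupling the architecture is after.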

Our Workflow: IDE-Centered Intelligence with MCP

At Creospan, we deliberately designed our MCP-based workflow around a simple but important belief: meaningful engineering decisions require code-level context. While large language models can reason over user stories and tickets in isolation, real prioritization, dependency analysis, and implementation planning only become reliable when the model understands the actual code it is going to change. This is precisely the gap MCP helps us bridge.

This is why our workflow places the IDE, not the task tracker or project planning tool, at the center.

At Creospan, Linear serves as our project management tool. It is a high-performance tracker designed to streamline software development workflows through a minimalist interface, and it holds our user stories, priorities, and labels. However, instead of treating Linear as the place where decisions are made, we treat it as a structured input source. Through an MCP connection, stories flow from Linear directly into the coding environment, where they can be evaluated with full visibility into the codebase using the AI-assisted IDE’s context engine.

Once inside the AI-assisted IDE (Cursor, GitHub Copilot, Augment Code, etc.), the LLM operates with two critical forms of context. The first is project management context, fetched from Linear via MCP. The second is implementation context, derived from the code repository itself using the IDE’s context engine, which maintains a live understanding of the stack across repositories, services, and code history.

This combination enables a class of reasoning that is difficult to achieve elsewhere. As stories are loaded into the IDE, the LLM can reason across them to surface overlaps, shared implementation paths, and implicit relationships. Similar stories can be grouped not just based on description but based on the parts of the codebase they affect. Common work emerges naturally when multiple tickets map to the same components or abstractions. Ordering concerns surface by inspecting dependencies in code rather than relying solely on ticket-level links.
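As a rough illustration of grouping by affected code rather than by description, the sketch below assumes each story has already been mapped to the files it would touch. In our workflow the LLM and the IDE’s context engine produce that mapping; here it is hard-coded, and the story IDs, file paths, and function names (`group_by_component`, `overlapping_pairs`, `suggest_order`) are all hypothetical. It shows the idea only, not how the IDE does it internally.

```python
from collections import defaultdict
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from itertools import combinations
from typing import Dict, List, Set, Tuple


def group_by_component(story_components: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """Invert story -> components and keep components touched by 2+ stories."""
    index: Dict[str, List[str]] = defaultdict(list)
    for story, components in story_components.items():
        for component in components:
            index[component].append(story)
    return {c: sorted(stories) for c, stories in index.items() if len(stories) > 1}


def overlapping_pairs(story_components: Dict[str, Set[str]]) -> List[Tuple[str, str, List[str]]]:
    """List every pair of stories that shares at least one component."""
    pairs = []
    for a, b in combinations(sorted(story_components), 2):
        shared = story_components[a] & story_components[b]
        if shared:
            pairs.append((a, b, sorted(shared)))
    return pairs


def suggest_order(depends_on: Dict[str, Set[str]]) -> List[str]:
    """Order stories so that each one comes after the stories it depends on."""
    return list(TopologicalSorter(depends_on).static_order())


# Hypothetical mapping of stories to the files they would change.
stories = {
    "S-1": {"auth/session.py", "api/users.py"},
    "S-2": {"auth/session.py"},
    "S-3": {"billing/invoice.py"},
}
# Hypothetical code-level dependency: S-1 builds on the change made by S-2.
dependencies = {"S-1": {"S-2"}, "S-2": set(), "S-3": set()}

print(group_by_component(stories))
print(overlapping_pairs(stories))
print(suggest_order(dependencies))
```

Two stories with unrelated titles still land in the same group if they touch the same file, which is the kind of overlap ticket descriptions alone tend to hide.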

Importantly, this reasoning is not fully automated or opaque. The LLM proposes insights and prioritization suggestions, but developers remain in the loop. Engineers validate, adjust, or override decisions with a clear understanding of why a particular ordering or grouping was suggested. MCP makes this possible by ensuring that product intent from Linear and technical reality from the codebase, surfaced by the context engine, are available together inside the IDE.

Once decisions are validated, the workflow completes its loop. Updates, refinements, and execution outcomes are pushed back into Linear via MCP, keeping the product view synchronized without forcing developers to leave their editor. Developers can then pick up a story, begin implementation, and update its status directly from the IDE. Every change, discussion, and update stays synchronized, giving stakeholders a live view of progress while preserving developer flow.

Notion as the Learning Layer

If Linear captures what we plan to build, Notion captures how we build it. Notion is an all-in-one workspace that blends note-taking, document collaboration, and database management into a single, highly customizable platform. Through a separate MCP server, we log meaningful AI interactions from the IDE into Notion. This includes prompts that led to better architectural decisions, reasoning traces behind prioritization choices, and patterns that repeat across projects. Over time, these logs have evolved into a knowledge dataset, a reflection of how our team collaborates with AI. By analyzing them, we uncover which prompts drive faster development or cleaner code. The most effective ones become shared templates, enabling the entire team to improve collectively rather than individually.
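One simple analysis over such logs is finding prompt patterns that show up in more than one project, since those are natural candidates for shared templates. The sketch below assumes a log entry reduced to three fields; `InteractionLog`, its field names, and the sample entries are hypothetical and do not reflect our actual Notion schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class InteractionLog:
    """Hypothetical shape of one logged AI interaction."""
    project: str
    prompt_pattern: str
    note: str


def patterns_across_projects(
    logs: Iterable[InteractionLog], min_projects: int = 2
) -> Dict[str, List[str]]:
    """Return prompt patterns seen in at least `min_projects` distinct projects."""
    projects_by_pattern: Dict[str, Set[str]] = defaultdict(set)
    for log in logs:
        projects_by_pattern[log.prompt_pattern].add(log.project)
    return {
        pattern: sorted(projects)
        for pattern, projects in projects_by_pattern.items()
        if len(projects) >= min_projects
    }


# Made-up sample entries for illustration only.
sample_logs = [
    InteractionLog("checkout", "list affected modules first", "clarified scope"),
    InteractionLog("search", "list affected modules first", "found hidden dependency"),
    InteractionLog("search", "ask for a rollback plan", "used once"),
]

print(patterns_across_projects(sample_logs))
```

A pattern that appears repeatedly inside one project is counted once for that project, so the result reflects spread across projects rather than raw frequency.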

The result is a connected system where planning, implementation, and learning reinforce each other through shared context. MCP’s value lies not in tool integration itself, but in enabling intelligence to operate within the IDE, where code and product intent converge.

At Creospan, we see this as a key step forward for SDLC productivity, where small efficiencies compound across teams and projects. In the end, our implementation shows how AI systems can evolve from reactive to proactive. Tools like Notion and Linear are not just endpoints; they are contexts. With MCP, we give AI the means to understand, navigate, and contribute to those contexts intelligently.

Conclusion

As AI continues to reshape the landscape of software development, MCP stands out as a transformative standard for building agentic, context-aware workflows. By bridging product intent and technical reality within the IDE, MCP empowers both AI and human collaborators to make informed, reliable decisions, driving productivity and innovation across teams. The recent evolution of MCP, with enhanced security, structured tool output, and seamless IDE integrations, positions it not just as a technical solution but as a foundation for the next generation of intelligent engineering systems.

Article Written By Dhairya Bhuta