This article explores three key areas: Generative AI and its patterns, the Retrieval-Augmented Generation (RAG) framework, and AWS’s role in supporting this journey.

What is Generative AI?

Generative AI is a type of artificial intelligence focused on the ability of computers to use models to create content like images, text, code, and synthetic data.

The foundations of Generative AI applications are large language models (LLMs) and foundation models (FMs).

Large Language Models (LLMs) are trained on vast volumes of data and use billions of parameters. This gives them the ability to generate original output for tasks like completing sentences, translating languages, and answering questions.

Foundation models (FMs) are large ML models that are pre-trained with the intention that they will be fine-tuned for more specific language understanding and generation tasks.

Once these models have completed their learning processes, they generate statistically probable outputs. When prompted (queried), they can be employed to accomplish various tasks, such as:

- Image generation based on existing ones or utilizing the style of one image to modify or create a new one.

- Language-oriented tasks such as translation, question/answer generation, and interpretation of the intent or meaning of text.

Generative AI applications commonly follow these design patterns:

- Prompt Engineering: Crafting specialized prompts to guide LLM behavior

- Retrieval Augmented Generation (RAG): Combining an LLM with external knowledge retrieval. This brings together the best of both capabilities (most recommended).

- Fine-tuning: Adapting a pre-trained LLM to specific datasets or domains, e.g., customer service or health care.

- Pre-training: Training an LLM from scratch. This needs a lot of computing power and time.
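
As a small illustration of the first pattern in the list above, the sketch below assembles a few-shot prompt: an instruction, a couple of worked examples, and the new input. The function name `build_prompt`, the `Input:`/`Output:` layout, and the sample messages are assumptions made for this example, not a fixed standard.

```python
def build_prompt(instruction, examples, user_input):
    """Assemble a few-shot prompt from an instruction, examples, and a new input."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # Leave the final output empty so the model completes it.
    lines.append(f"Input: {user_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    "Classify the sentiment of each customer message as positive or negative.",
    [
        ("The delivery was fast, thank you!", "positive"),
        ("My order arrived broken.", "negative"),
    ],
    "The support agent solved my problem quickly.",
)
print(prompt)
```

The resulting string would be sent to whichever LLM you use. The examples steer the model toward the expected answer format without changing the model itself.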

Retrieval Augmented Generation (RAG):

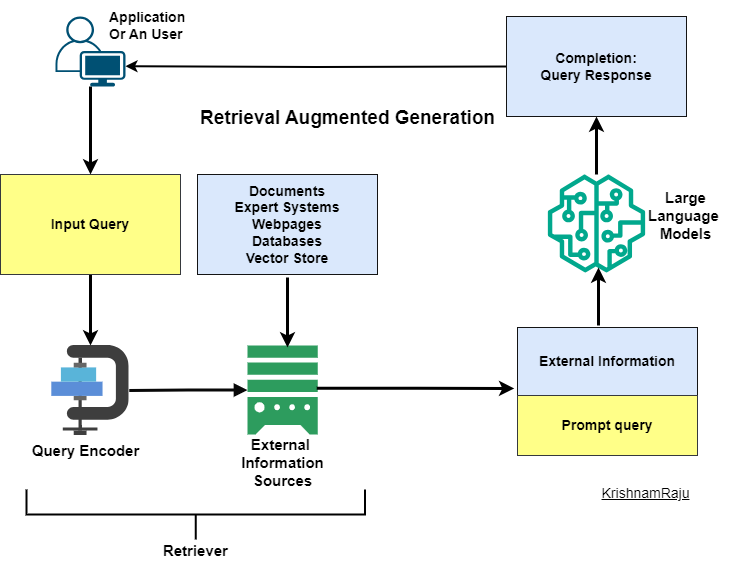

RAG (Retrieval Augmented Generation) is a method to improve LLM response accuracy by giving your LLM access to external data sources.

LLMs are trained on enormous data sets, but they don’t have specific context for your business, industry, or customer-specific needs. RAG adds that crucial layer of information so that LLMs can reach well-grounded conclusions.

To understand RAG, we need to explore the limitations of LLMs.

Limitations of LLMs:

- Hallucination: An LLM may present false information when it does not have the answer, or even when no answer exists.

- Outdated Info: Presenting out-of-date or generic information when the user wants a specific, accurate response.

- Terminology confusion: Generating inaccurate responses because different training sources use the same terminology for different things.

- Non-authoritative sources: Creating a response from sources that are not authoritative.

RAG works in three stages:

- Retrieval: When a request arrives, the system looks for relevant information to inform the final response. It searches through an external dataset or document collection to find the most relevant pieces of information. This dataset could be a curated knowledge base, any extensive collection of text, images, videos, and audio, or even your local database.

- Augmentation: In this step the query is enhanced with the information retrieved in the previous step.

- Generation: The final augmented response or output is generated. Your LLM uses the additional context provided by the augmented input to produce an answer that is not only relevant to the original query but enriched with information from external sources.
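
The three stages above can be sketched as a toy pipeline. This is a minimal illustration under stated assumptions, not a production design: the `DOCUMENTS` list stands in for an external knowledge base, retrieval uses simple word overlap (real systems typically use vector embeddings and a vector store), and `fake_llm` is a placeholder for a call to an actual model. All names here are hypothetical.

```python
import re
from collections import Counter

# Hypothetical stand-in for an external knowledge base.
DOCUMENTS = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Standard shipping takes 3 to 5 business days within the country.",
    "Support is available by chat from 9am to 6pm on weekdays.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=1):
    """Retrieval: rank documents by the number of words shared with the query."""
    query_words = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_words & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def augment(query, passages):
    """Augmentation: add the retrieved passages to the original query."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


def fake_llm(prompt):
    """Placeholder model: returns the first context passage it was given."""
    passages = [line[2:] for line in prompt.splitlines() if line.startswith("- ")]
    return passages[0] if passages else "I don't know."


def generate(prompt, llm):
    """Generation: pass the augmented prompt to the model."""
    return llm(prompt)


question = "How long does shipping take?"
passages = retrieve(question, DOCUMENTS)
prompt = augment(question, passages)
answer = generate(prompt, fake_llm)
print(answer)
```

Swapping `fake_llm` for a real model call and `retrieve` for a search over your own data keeps the same three-step shape.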

Customer service RAG use cases:

Personalized recommendations: Generate personalized product recommendations based on a customer’s browsing patterns, past interactions, and preferences.

Advanced chatbots: RAG empowers chatbots to answer complex questions and provide personalized support to customers, improving customer satisfaction and reducing support costs.

Knowledge base search: Quickly retrieve relevant information from internal knowledge bases to answer customer inquiries faster and more accurately.

AWS supports RAG in the following ways:

Amazon Bedrock: A fully managed service that offers a choice of high-performing foundation models, along with a broad set of capabilities, to build generative AI applications while simplifying development and maintaining privacy and security. With Knowledge Bases for Amazon Bedrock, you can connect FMs to your data sources for RAG in just a few clicks. Vector conversions, retrievals, and improved output generation are all handled automatically.

Amazon Kendra: A highly accurate enterprise search service powered by machine learning, suited to organizations managing their own RAG. It provides an optimized Kendra Retrieve API that you can use with Amazon Kendra’s high-accuracy semantic ranker as an enterprise retriever for your RAG workflows.

Amazon SageMaker: Amazon SageMaker JumpStart is an ML hub with FMs, built-in algorithms, and prebuilt ML solutions that you can deploy with just a few clicks. You can speed up RAG implementation by referring to existing SageMaker notebooks and code examples.
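
The first two options above could look roughly like this when called from Python through boto3. This is a hedged sketch rather than a reference implementation: the helper names, the knowledge base ID, the index ID, and the model ARN are placeholders, and the request shapes reflect the boto3 `retrieve_and_generate` (client `bedrock-agent-runtime`) and `retrieve` (client `kendra`) operations as I understand them, so check them against the current AWS documentation. The clients are passed in as arguments so the functions stay independent of how credentials are configured.

```python
def ask_knowledge_base(client, knowledge_base_id, model_arn, question):
    """Managed RAG: Bedrock retrieves, augments, and generates in one call.

    `client` is assumed to be boto3.client("bedrock-agent-runtime").
    """
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,  # placeholder ID
                "modelArn": model_arn,  # placeholder model ARN
            },
        },
    )
    return response["output"]["text"]


def retrieve_passages(client, index_id, question, page_size=3):
    """Self-managed RAG: fetch passages from Kendra for your own prompt.

    `client` is assumed to be boto3.client("kendra").
    """
    response = client.retrieve(
        IndexId=index_id,  # placeholder index ID
        QueryText=question,
        PageSize=page_size,
    )
    return [item["Content"] for item in response["ResultItems"]]
```

With boto3 installed and AWS credentials configured, the clients would be created with `boto3.client("bedrock-agent-runtime")` and `boto3.client("kendra")`. The Kendra passages would then be added to a prompt and sent to the model of your choice, which is the part Bedrock Knowledge Bases handles for you.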

Article written by Krishnam Raju Bhupathiraju.