Roughly 120 zettabytes of data is produced each year, by common industry estimates! This includes everything from the data generated by rovers on Mars to your latest vacation posts on Instagram. Google alone processes a staggering 1.2 trillion searches every year. A couple of weeks ago, Netflix published viewership statistics for over 18,000 titles, watched by 247 million households in the first half of 2023. Each title had a minimum watch time of 50,000 hours. WhatsApp, used in over 180 countries, sees a whopping 140 billion messages exchanged daily by its 2.78 billion monthly users.

Ever wondered how all this data is managed? In this article, our software alchemist, El Mehdi Korda, unpacks the evolution of databases from mere storage units to strategic assets.

The Evolution of Databases: From Storage to Strategic Asset

In the ever-evolving tapestry of today’s software landscape, databases emerge not just as simple repositories, but as the foundational heartbeat powering digital experiences. Central to this transformation has been the role of the Database Management System (DBMS). Early on, relational (SQL) databases were the de facto choice, managing data in structured tables, and they played an instrumental role in that era. But the DBMS has moved far beyond its early days as a mere arbiter of storage: today’s DBMS stands tall as an orchestrator of data retrieval, sharing, and safeguarding.

Embracing Modern Data Solutions: NoSQL and the Cloud

As we transitioned from traditional to more dynamic data needs, our data management tools had no choice but to evolve in tandem. Enter the era of NoSQL databases like MongoDB and Cassandra. These weren’t just new tools; they represented a paradigm shift. Agile and robust, they stepped up to the plate, catering to the surging tide of unstructured or semi-structured data birthed by modern applications. And just as we were coming to grips with this change, another revolution was on the horizon: the cloud. Managed services like Amazon DynamoDB and Google Cloud Firestore didn’t just offer storage; they promised an ensemble of high availability, scalability, and integrative capabilities. These platforms subtly shifted corporate energies, allowing a focus away from infrastructural nuances and more towards core vision and innovation.
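To make the contrast between structured and semi-structured data concrete, here is a minimal sketch using only Python’s standard library. `sqlite3` stands in for a relational table with a fixed schema, and a plain list of dictionaries stands in for the kind of document collection a store like MongoDB manages. The `users` table, the field names, the sample records, and the `find` helper are all hypothetical and are not any vendor’s API.

```python
import json
import sqlite3

# Relational style: every row must fit the columns declared up front.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT)"
)
conn.execute(
    "INSERT INTO users (id, name, email) VALUES (?, ?, ?)",
    (1, "Ada", "ada@example.com"),
)
rows = conn.execute("SELECT id, name, email FROM users").fetchall()

# Document style: each record carries its own shape, so fields can vary.
documents = [
    {"_id": 1, "name": "Ada", "email": "ada@example.com"},
    {"_id": 2, "name": "Lin", "devices": [{"type": "phone", "os": "Android"}]},
]


def find(collection, **criteria):
    """Return documents whose top-level fields match every criterion."""
    return [
        doc
        for doc in collection
        if all(doc.get(key) == value for key, value in criteria.items())
    ]


print(rows)
print(json.dumps(find(documents, name="Lin"), indent=2))
conn.close()
```

The second document has no `email` and adds a nested `devices` list, something the relational table would only accept after a schema change.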

Guided by the CAP Theorem: Prioritizing Data Access

Yet, with every innovation comes a new set of choices, and the CAP theorem emerged as the guiding star in this dynamic realm of databases. It states that a distributed data store cannot guarantee Consistency, Availability, and Partition Tolerance all at once. Since network partitions cannot be ruled out in a distributed system, the practical question is what to give up when one occurs: do we lean towards Consistency (CP), or should we pivot towards Availability (AP)? For instance, a social media platform may prioritize Availability to ensure users always access their feed, even at the cost of momentary inconsistency in seeing the latest posts. In contrast, a banking system might favor Consistency to ensure every transaction is accurately recorded, even if it means temporary unavailability during network disruptions. These real-world applications of the CAP theorem illustrate its significance beyond theory, impacting how we interact with technology daily.

As organizations grappled with these questions, the real-world implications became clear. In an age where everything is interconnected, even a minor network disruption can have magnified impacts, making the CAP theorem not just a theoretical concept, but a real-world decision-making tool.
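The trade-off can be sketched with a toy model. The `ToyCluster` class below is purely illustrative and deliberately simplified: it has two replicas and a `partitioned` flag, whereas real systems rely on quorums, leader election, and conflict resolution. In "CP" mode it refuses writes during a partition (the banking choice), and in "AP" mode it accepts them locally and lets the replicas diverge (the social feed choice).

```python
class Replica:
    def __init__(self, name):
        self.name = name
        self.data = {}


class ToyCluster:
    """Two replicas joined by a link that can be cut (hypothetical model)."""

    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.a = Replica("a")
        self.b = Replica("b")
        self.partitioned = False

    def write(self, replica, key, value):
        other = self.b if replica is self.a else self.a
        if self.partitioned:
            if self.mode == "CP":
                # Consistency first: refuse the write rather than diverge.
                raise RuntimeError("unavailable during partition")
            # Availability first: accept locally; replicas may now disagree.
            replica.data[key] = value
            return
        # Healthy network: apply the write to both replicas.
        replica.data[key] = value
        other.data[key] = value

    def read(self, replica, key):
        return replica.data.get(key)


bank = ToyCluster("CP")
bank.write(bank.a, "balance", 100)
bank.partitioned = True
try:
    bank.write(bank.a, "balance", 50)
except RuntimeError as err:
    print("bank write rejected:", err)

feed = ToyCluster("AP")
feed.write(feed.a, "latest_post", "hello")
feed.partitioned = True
feed.write(feed.a, "latest_post", "new photo")
print("feed replicas:", feed.read(feed.a, "latest_post"), "vs", feed.read(feed.b, "latest_post"))
```

During the partition the bank keeps a single agreed balance but turns the customer away, while the feed stays responsive and serves a stale post from the second replica until the link heals.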

Cloud vs. On-Premise: The Deployment Dilemma

But the journey of database selection has layers. Beyond just the model or type, there looms the larger question of deployment. Do we soar with the cloud, embracing its promise of scalability and operational efficiency? Or do we stay grounded in on-prem solutions, valuing the control and security they offer? The allure of cloud databases is undeniable with their operational agility, but they also add a recurring cost column to the ledger. On the flip side, on-premises options might demand a hefty initial outlay and periodic maintenance but in return offer an unparalleled fortress of control and security.
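One rough way to compare a recurring cloud bill with an up-front on-prem investment is a break-even calculation. The sketch below is a back-of-the-envelope model only: the function names and every figure in the example are hypothetical, and it ignores discounting, staffing, data egress fees, and hardware refresh cycles.

```python
def cumulative_cost(upfront, monthly, months):
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(cloud_monthly, onprem_upfront, onprem_monthly, horizon_months=120):
    """First month at which on-prem spend is no higher than cloud spend.

    Returns None if that never happens within the horizon.
    """
    for month in range(1, horizon_months + 1):
        cloud = cumulative_cost(0, cloud_monthly, month)
        onprem = cumulative_cost(onprem_upfront, onprem_monthly, month)
        if onprem <= cloud:
            return month
    return None


# Hypothetical figures: 4,000 per month for a managed cloud database versus
# 90,000 up front plus 1,500 per month of maintenance on-premises.
print(break_even_month(cloud_monthly=4000, onprem_upfront=90000, onprem_monthly=1500))  # 36
```

With these made-up numbers, on-prem catches up after three years. If the cloud bill is lower than the on-prem maintenance cost, the function returns `None` because the up-front outlay is never recovered.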

Database Performance Evaluation Metrics

When evaluating database performance for software applications in healthcare, insurance, and finance, metrics play a crucial role. Key performance indicators (KPIs) such as query response time, transaction throughput, and resource utilization (CPU, memory, disk I/O) are vital. In healthcare, with its stringent data privacy requirements, security breach response times and encryption strength are also pivotal. In insurance and finance, where real-time data processing is key, look at metrics like transaction processing speed and uptime. Additionally, consider the scalability of the database to handle growing data volumes and user load, particularly in these dynamic industries. Regular monitoring of these metrics will ensure optimal database performance aligned with industry-specific needs.
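As an illustration of how query response time and throughput might be measured, here is a minimal sketch against an in-memory SQLite database. The `claims` table, the `benchmark` and `percentile` helpers, and the sample query are assumptions made for the example. A production benchmark would run against the real engine, with realistic data volumes and concurrent clients.

```python
import math
import sqlite3
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def benchmark(conn, sql, params=(), runs=200):
    """Run one query repeatedly and summarize latency and throughput."""
    latencies = []
    start = time.perf_counter()
    for _ in range(runs):
        t0 = time.perf_counter()
        conn.execute(sql, params).fetchall()
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
        "queries_per_sec": runs / elapsed,
    }


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE claims (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany(
    "INSERT INTO claims (amount) VALUES (?)",
    [(i * 1.5,) for i in range(10_000)],
)
stats = benchmark(conn, "SELECT COUNT(*) FROM claims WHERE amount > ?", (5000,))
print(stats)
conn.close()
```

Reporting a high percentile such as p95 alongside the median is a common choice, since averages tend to hide the slow queries that users actually notice.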

Here are a few metrics you can use to evaluate the performance of your database:

- Data Latency: Time taken for data to travel from its source to its destination, crucial for real-time applications. In finance, latency matters most in high-frequency trading, where milliseconds can impact decisions; high latency can delay transaction processing or the arrival of crucial market data.

- Indexing Efficiency: Speed and effectiveness of data indexing, impacting search and retrieval times. In healthcare, efficient indexing is vital for quickly accessing patient records. Poor indexing can lead to slow retrieval of vital patient information.

- Cache Hit Ratio: Frequency at which data is served from the cache versus the database, indicating efficiency in data retrieval. In telecom, a high cache hit ratio is essential for quickly serving frequently requested data like user profiles or service plans, enhancing user experience.

- Concurrency Levels: Ability to handle multiple simultaneous transactions, vital for high-traffic industries like finance and insurance. In insurance, high concurrency levels are necessary to handle numerous simultaneous insurance claims during peak times.

- Data Redundancy and Replication: Measures the database’s ability to replicate data across systems for reliability and fault tolerance. In finance, data redundancy ensures that in the event of a system failure, there’s no data loss, which is critical for transaction records.

- Backup and Recovery Times: Speed and efficiency of data backup and recovery processes. In healthcare, efficient backup and recovery are crucial for patient data, especially in emergency situations where data availability can be life-saving.

- Database Connection Times: Time taken to establish connections with the database, impacting application response times. In online retail, fast database connection times are essential for handling customer transactions without delays.

- Data Integrity and Consistency: Ensuring data remains accurate and consistent across the database, especially critical in healthcare and finance. In finance, ensuring data integrity is vital for accurate financial reporting and compliance with regulations.

- Read/Write Throughput: The volume of data reads and writes the database can handle, indicating overall performance. In telecom, high read/write throughput is necessary to handle vast amounts of data generated by users and IoT devices.
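Two of the metrics above, indexing efficiency and cache hit ratio, can be illustrated with a short sketch using Python’s standard library. It uses SQLite’s `EXPLAIN QUERY PLAN` to check whether a lookup uses an index, and `functools.lru_cache` as a stand-in for an application-level cache. The `patients` table, the `idx_patients_mrn` index, the sample records, and the helper names are hypothetical. The exact plan wording varies between SQLite versions.

```python
import sqlite3
from functools import lru_cache

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (id INTEGER PRIMARY KEY, mrn TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO patients (mrn, name) VALUES (?, ?)",
    [(f"MRN{i:05d}", f"patient-{i}") for i in range(1000)],
)


def query_plan(sql, params=()):
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return "; ".join(row[3] for row in rows)  # fourth column holds the detail text


lookup = "SELECT name FROM patients WHERE mrn = ?"

# Indexing efficiency: compare the plan before and after adding an index.
plan_before = query_plan(lookup, ("MRN00042",))
conn.execute("CREATE INDEX idx_patients_mrn ON patients (mrn)")
plan_after = query_plan(lookup, ("MRN00042",))


# Cache hit ratio: share of lookups answered without touching the database.
@lru_cache(maxsize=128)
def patient_name(mrn):
    row = conn.execute(lookup, (mrn,)).fetchone()
    return row[0] if row else None


for mrn in ["MRN00001", "MRN00002", "MRN00001", "MRN00001"]:
    patient_name(mrn)

info = patient_name.cache_info()
hit_ratio = info.hits / (info.hits + info.misses)

print("before index:", plan_before)
print("after index: ", plan_after)
print(f"cache hit ratio: {hit_ratio:.2f}")
conn.close()
```

Before the index exists the plan describes a full table scan, and afterwards it names `idx_patients_mrn`. Of the four lookups, the first request for each record misses the cache and the two repeats hit it, giving a ratio of 0.50.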

Selecting and evaluating a database for your software application requires a comprehensive approach. This involves considering a framework that ensures both technical and operational resilience. Such a framework should encompass a variety of factors, including database performance, scalability, security, and compatibility with existing systems, to ensure the chosen database aligns well with your application’s needs and objectives.

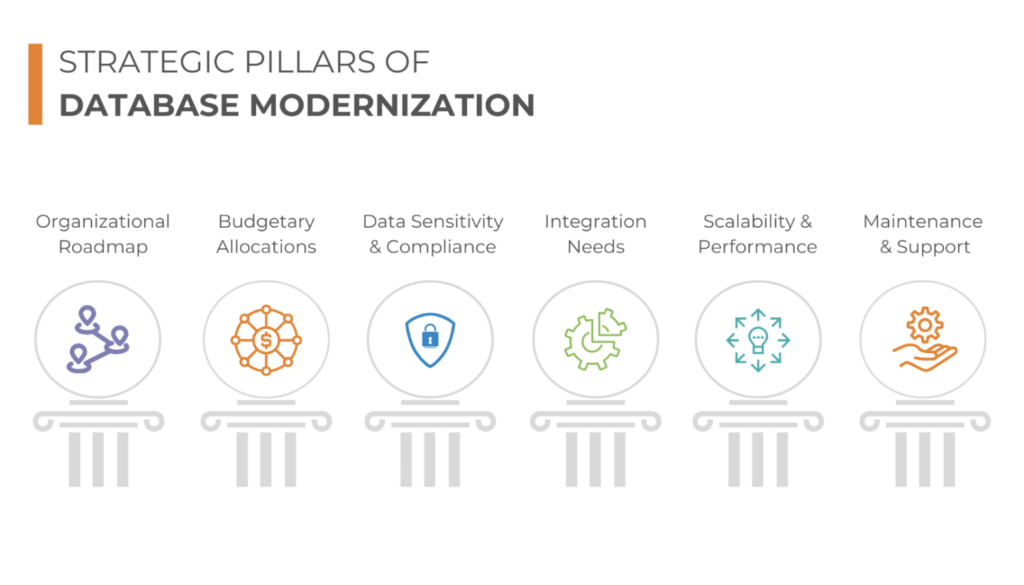

Strategic Pillars of Database Modernization

As organizations embark on the comprehensive journey of database modernization, it’s imperative to navigate the process with a clear strategic framework. The following considerations serve as essential touchpoints to ensure a successful transition:

- Organizational Roadmap: It’s essential to envision the organization’s trajectory over the next decade. What are the anticipated changes in the data ecosystem, and how will they align with the broader strategic goals?

- Budgetary Allocations: Modernization comes with both immediate and long-term financial implications. Organizations need to evaluate whether they are prepared for recurring expenses or if a one-time significant investment resonates more with their fiscal strategies.

- Data Sensitivity and Compliance: Modern databases are not just about storing information; they’re about safeguarding it. Organizations must assess the various compliance and regulatory parameters that influence their decision, ensuring that data protection remains paramount. Specific security features like encryption, access controls, and audit logs should be a key consideration.

- Integration Needs: Will the new system play well with existing tools or are custom solutions the need of the hour? In an interconnected tech landscape, the new database system should seamlessly integrate with existing infrastructures. This includes compatibility with existing data formats and the availability of migration tools or support.

- Scalability and Performance: Can the new database dynamically adjust to peak demands and quiet lulls? The ideal database solution should be adept at handling fluctuating demands. Whether it’s accommodating spikes in traffic or managing quieter periods, adaptability in performance is crucial.

- Maintenance and Support: What does the support landscape look like? Is help just a call away or a community forum? A robust support mechanism is pivotal for smooth operations. Organizations must ascertain the availability and quality of support – whether it’s through dedicated helplines, community forums, or other channels.
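One simple way to weigh these considerations against each other is a weighted scoring matrix. The sketch below is an illustration rather than a prescribed method: the weights, the candidate names, and the 1-to-5 scores are all hypothetical, and should be replaced with values agreed by your own stakeholders.

```python
# Hypothetical weights for the six considerations above; they sum to 1.0.
WEIGHTS = {
    "roadmap_fit": 0.15,
    "budget": 0.15,
    "compliance": 0.25,
    "integration": 0.15,
    "scalability": 0.20,
    "support": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (for example 1 to 5) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical candidates with made-up scores.
candidates = {
    "managed_cloud_db": {
        "roadmap_fit": 4, "budget": 3, "compliance": 3,
        "integration": 4, "scalability": 5, "support": 4,
    },
    "self_hosted_db": {
        "roadmap_fit": 3, "budget": 2, "compliance": 5,
        "integration": 3, "scalability": 3, "support": 3,
    },
}

ranking = sorted(
    ((weighted_score(scores), name) for name, scores in candidates.items()),
    reverse=True,
)
for score, name in ranking:
    print(f"{name}: {score:.2f}")
```

The value of the exercise lies less in the final number than in forcing an explicit conversation about which considerations matter most, for instance how heavily compliance should weigh in a regulated industry.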

Steering the Course to Excellence

In stitching these considerations together, one thing becomes clear: databases, in the modern era, are more than mere tools. They’re strategic assets, central to digital transformation. And as we move forward, it’s not just about the choices we make, but the strategy, foresight, and adaptability we bring to the table. If you’re pondering the database maze and could use a helping hand, just drop us a line in the comment section. One of our experts will be delighted to offer you guidance and help illuminate your route to success.

Key Contributor: El Mehdi Korda, Creospan Software Alchemist